- Core Architecture: Uses a DiT (Diffusion Transformer) architecture similar to Sora, effectively fusing text, image, and motion information to improve consistency, quality, and alignment between generated video frames. A unified full-attention mechanism enables multi-view camera transitions while ensuring subject consistency.

- 3D VAE: The custom 3D VAE compresses videos into a compact latent space, making image-to-video generation more efficient.

- Superior Image-Video-Text Alignment: Utilizing MLLM text encoders that excel in both image and video generation, better following text instructions, capturing details, and performing complex reasoning.

Common Models for All Workflows

The following models are used in both Text-to-Video and Image-to-Video workflows. Please download and save them to the specified directories: Storage location:Hunyuan Text-to-Video Workflow

Hunyuan Text-to-Video was open-sourced in December 2024, supporting 5-second short video generation through natural language descriptions in both Chinese and English.1. Workflow

Download the image below and drag it into ComfyUI to load the workflow:

2. Manual Models Installation

Download hunyuan_video_t2v_720p_bf16.safetensors and save it to theComfyUI/models/diffusion_models folder.

Ensure you have all these model files in the correct locations:

3. Steps to Run the Workflow

- Ensure the

DualCLIPLoadernode has loaded these models:- clip_name1: clip_l.safetensors

- clip_name2: llava_llama3_fp8_scaled.safetensors

- Ensure the

Load Diffusion Modelnode has loadedhunyuan_video_t2v_720p_bf16.safetensors - Ensure the

Load VAEnode has loadedhunyuan_video_vae_bf16.safetensors - Click the

Queuebutton or use the shortcutCtrl(cmd) + Enterto run the workflow

Hunyuan Image-to-Video Workflow

Hunyuan Image-to-Video model was open-sourced on March 6, 2025, based on the HunyuanVideo framework. It transforms static images into smooth, high-quality videos and also provides LoRA training code to customize special video effects like hair growth, object transformation, etc. Currently, the Hunyuan Image-to-Video model has two versions:- v1 “concat”: Better motion fluidity but less adherence to the image guidance

- v2 “replace”: Updated the day after v1, with better image guidance but seemingly less dynamic compared to v1

v1 “concat”

v2 “replace”

Shared Model for v1 and v2 Versions

Download the following file and save it to theComfyUI/models/clip_vision directory:

V1 “concat” Image-to-Video Workflow

1. Workflow and Asset

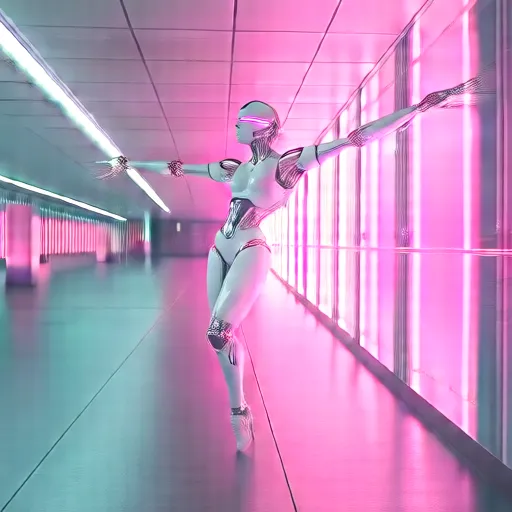

Download the workflow image below and drag it into ComfyUI to load the workflow: Download the image below, which we’ll use as the starting frame for the image-to-video generation:

Download the image below, which we’ll use as the starting frame for the image-to-video generation:

2. Related models manual installation

Ensure you have all these model files in the correct locations:3. Steps to Run the Workflow

- Ensure that

DualCLIPLoaderhas loaded these models:- clip_name1: clip_l.safetensors

- clip_name2: llava_llama3_fp8_scaled.safetensors

- Ensure that

Load CLIP Visionhas loadedllava_llama3_vision.safetensors - Ensure that

Load Image Modelhas loadedhunyuan_video_image_to_video_720p_bf16.safetensors - Ensure that

Load VAEhas loadedvae_name: hunyuan_video_vae_bf16.safetensors - Ensure that

Load Diffusion Modelhas loadedhunyuan_video_image_to_video_720p_bf16.safetensors - Click the

Queuebutton or use the shortcutCtrl(cmd) + Enterto run the workflow

v2 “replace” Image-to-Video Workflow

The v2 workflow is essentially the same as the v1 workflow. You just need to download the replace model and use it in theLoad Diffusion Model node.

1. Workflow and Asset

Download the workflow image below and drag it into ComfyUI to load the workflow: Download the image below, which we’ll use as the starting frame for the image-to-video generation:

Download the image below, which we’ll use as the starting frame for the image-to-video generation:

2. Related models manual installation

Ensure you have all these model files in the correct locations:3. Steps to Run the Workflow

- Ensure the

DualCLIPLoadernode has loaded these models:- clip_name1: clip_l.safetensors

- clip_name2: llava_llama3_fp8_scaled.safetensors

- Ensure the

Load CLIP Visionnode has loadedllava_llama3_vision.safetensors - Ensure the

Load Image Modelnode has loadedhunyuan_video_image_to_video_720p_bf16.safetensors - Ensure the

Load VAEnode has loadedhunyuan_video_vae_bf16.safetensors - Ensure the

Load Diffusion Modelnode has loadedhunyuan_video_v2_replace_image_to_video_720p_bf16.safetensors - Click the

Queuebutton or use the shortcutCtrl(cmd) + Enterto run the workflow

Try it yourself

Here are some images and prompts we provide. Based on that content or make an adjustment to create your own video.