- Scene Complexity: Complex scenes require multiple control conditions working together

- Fine-grained Control: By adjusting the strength parameter of each ControlNet, you can precisely control the degree of influence for each part

- Complementary Effects: Different types of ControlNets can complement each other, compensating for the limitations of single controls

- Creative Expression: Combining different controls can produce unique creative effects

How to Mix ControlNets

When mixing multiple ControlNets, each ControlNet influences image generation in the area its control image covers. In ComfyUI, multiple ControlNet conditions are layered by chaining Apply ControlNet nodes. The conditioning output of one Apply ControlNet node feeds into the next, so each condition is applied on top of the previous ones.
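The chaining described above can be sketched in ComfyUI's API (JSON) workflow format. This is a minimal sketch under assumptions. It assumes the node class names ControlNetLoader, LoadImage and ControlNetApplyAdvanced (the class behind the Apply ControlNet node in recent ComfyUI versions) and their input names as we understand the current API format. The helper chain_controlnets, the node IDs and the image filenames are hypothetical.

```python
from __future__ import annotations

from typing import Any


def chain_controlnets(
    positive: list, negative: list, controls: list[dict[str, Any]], first_id: int
) -> tuple[dict[str, dict], list, list]:
    """Hypothetical helper: emit API-format nodes that apply each control on top
    of the previous one.

    positive/negative are links of the form [node_id, output_index] pointing at
    the text-encoded conditioning; controls are applied in list order.
    """
    nodes: dict[str, dict] = {}
    node_id = first_id
    for ctrl in controls:
        loader_id, image_id, apply_id = str(node_id), str(node_id + 1), str(node_id + 2)
        node_id += 3
        nodes[loader_id] = {
            "class_type": "ControlNetLoader",
            "inputs": {"control_net_name": ctrl["model"]},
        }
        nodes[image_id] = {
            "class_type": "LoadImage",
            "inputs": {"image": ctrl["image"]},  # filename in ComfyUI's input folder
        }
        nodes[apply_id] = {
            "class_type": "ControlNetApplyAdvanced",  # assumed class of "Apply ControlNet"
            "inputs": {
                "positive": positive,  # conditioning from the previous link in the chain
                "negative": negative,
                "control_net": [loader_id, 0],
                "image": [image_id, 0],  # output 0 of LoadImage is the IMAGE
                "strength": ctrl.get("strength", 1.0),
                "start_percent": 0.0,
                "end_percent": 1.0,
            },
        }
        # Outputs 0 and 1 of this node are the updated positive/negative conditioning,
        # which become the inputs of the next Apply ControlNet node.
        positive, negative = [apply_id, 0], [apply_id, 1]
    return nodes, positive, negative
```

For the example in this guide, you would pass the pose control first and the scribble control second, then connect the returned positive and negative links to the KSampler's positive and negative inputs. The order only determines how the nodes are wired; both conditions still reach the sampler.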

ComfyUI ControlNet Regional Division Mixing Example

In this example, we will use a combination of Pose ControlNet and Scribble ControlNet to generate a scene containing multiple elements: a character on the left controlled by Pose ControlNet and a cat on a scooter on the right controlled by Scribble ControlNet.

1. ControlNet Mixing Workflow Assets

Please download the workflow image below and drag it into ComfyUI to load the workflow.

Input pose image (controls the character pose on the left):

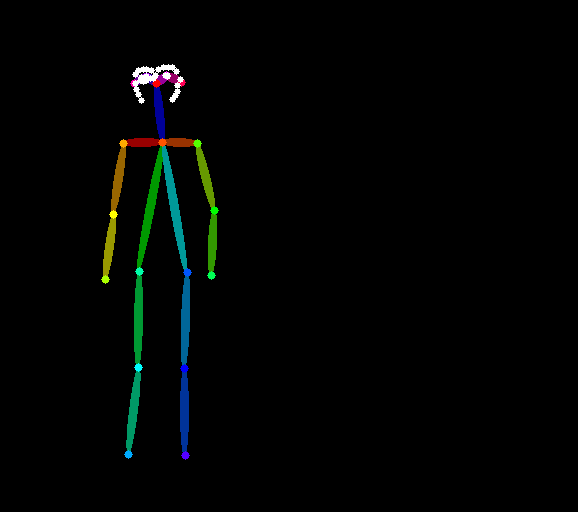


Input scribble image (controls the cat and scooter on the right):

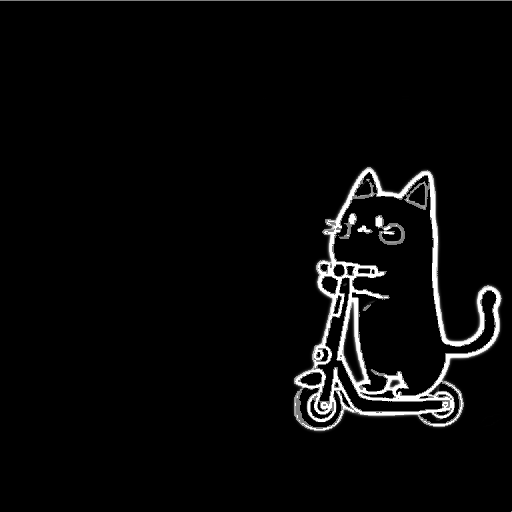


2. Manual Model Installation

If the automatic model download fails because of network issues, download the models below manually and place them in the following directories of your ComfyUI installation:

- awpainting_v14.safetensors: ComfyUI/models/checkpoints/

- control_v11p_sd15_scribble_fp16.safetensors: ComfyUI/models/controlnet/

- control_v11p_sd15_openpose_fp16.safetensors: ComfyUI/models/controlnet/

- vae-ft-mse-840000-ema-pruned.safetensors: ComfyUI/models/vae/
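Before running the workflow, you can check that the manually downloaded files are where ComfyUI looks for them. The sketch below assumes the default ComfyUI folder layout (models/checkpoints, models/controlnet, models/vae) with no extra model paths configured. The helper missing_models is hypothetical.

```python
from __future__ import annotations

from pathlib import Path

# Assumed default ComfyUI layout: <ComfyUI root>/models/<subfolder>/<file>.
REQUIRED_MODELS = {
    "checkpoints": ["awpainting_v14.safetensors"],
    "controlnet": [
        "control_v11p_sd15_scribble_fp16.safetensors",
        "control_v11p_sd15_openpose_fp16.safetensors",
    ],
    "vae": ["vae-ft-mse-840000-ema-pruned.safetensors"],
}


def missing_models(comfy_root: str | Path) -> list[Path]:
    """Return every expected model path that is not an existing file under comfy_root."""
    models_dir = Path(comfy_root) / "models"
    return [
        models_dir / folder / name
        for folder, names in REQUIRED_MODELS.items()
        for name in names
        if not (models_dir / folder / name).is_file()
    ]
```

Calling missing_models("/path/to/ComfyUI") returns an empty list when all four files are in place; any path it returns points at a file that still needs to be downloaded or moved.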

3. Step-by-Step Workflow Execution

1. Ensure that Load Checkpoint can load awpainting_v14.safetensors

2. Ensure that Load VAE can load vae-ft-mse-840000-ema-pruned.safetensors

First ControlNet group using the OpenPose model:

3. Ensure that Load ControlNet Model loads control_v11p_sd15_openpose_fp16.safetensors

4. Click Upload in the Load Image node to upload the pose image provided earlier

Second ControlNet group using the Scribble model:

5. Ensure that Load ControlNet Model loads control_v11p_sd15_scribble_fp16.safetensors

6. Click Upload in the Load Image node to upload the scribble image provided earlier

7. Click the Queue button or press Ctrl + Enter (Cmd + Enter on macOS) to run the image generation
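Clicking Queue submits the loaded workflow to the local ComfyUI server. If you want to trigger the same run from a script, here is a minimal sketch using only the Python standard library. It assumes the server runs at its default address 127.0.0.1:8188 and exposes the /prompt endpoint, and that workflow is a dict loaded from a workflow exported in ComfyUI's API format. The helpers build_queue_request and queue_workflow are hypothetical names.

```python
import json
import urllib.request
import uuid


def build_queue_request(workflow: dict, host: str = "127.0.0.1:8188") -> urllib.request.Request:
    """Build the POST request that queues an API-format workflow (the scripted
    counterpart of clicking Queue in the UI)."""
    payload = {"prompt": workflow, "client_id": str(uuid.uuid4())}
    return urllib.request.Request(
        f"http://{host}/prompt",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(workflow: dict, host: str = "127.0.0.1:8188") -> dict:
    """Send the request to a running ComfyUI server and return its parsed JSON reply."""
    with urllib.request.urlopen(build_queue_request(workflow, host)) as resp:
        return json.loads(resp.read())
```

Building the request separately from sending it keeps the payload easy to inspect before anything is queued. The server's reply is expected to include an ID for the queued prompt, but check it against your ComfyUI version.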

Workflow Explanation

Strength Balance

When controlling different regions of an image, balancing the strength parameters is particularly important:

- If the ControlNet strength for one region is significantly higher than that of another, that region's control can overpower and suppress the other region

- It’s recommended to set similar strength values for the ControlNets controlling different regions, for example 1.0 for both
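The effect of unbalanced strengths can be illustrated with a deliberately simplified toy model. This is an assumption, not ComfyUI's actual computation: every ControlNet is treated as producing an output of the same magnitude, and chained outputs are treated as simply scaled by strength and summed. Under this model, a ControlNet's share of the total control signal is its strength divided by the sum of all strengths. The helpers control_shares and is_imbalanced, and the 1.5 ratio threshold, are hypothetical.

```python
from __future__ import annotations


def control_shares(strengths: dict[str, float]) -> dict[str, float]:
    """Toy model: fraction of the total control signal contributed by each
    ControlNet, assuming equal raw output magnitudes scaled by strength."""
    total = sum(strengths.values())
    if total <= 0:
        raise ValueError("at least one strength must be positive")
    return {name: s / total for name, s in strengths.items()}


def is_imbalanced(strengths: dict[str, float], max_ratio: float = 1.5) -> bool:
    """Flag settings where the strongest control exceeds the weakest by more than
    max_ratio (a hypothetical threshold; judge the actual result by eye)."""
    values = list(strengths.values())
    return max(values) > max_ratio * min(values)
```

With both strengths at 1.0, each region gets half of the signal in this model. With pose at 1.6 and scribble at 0.4, the pose control accounts for 80% and the scribble control for only 20%, which is the kind of imbalance that can let one region suppress the other.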

Prompt Techniques

For regional division mixing, the prompt needs to describe the contents of both regions, in this example the character on the left and the cat riding a scooter on the right.

Multi-dimensional Control Applications for a Single Subject

In addition to the regional division mixing shown in this example, another common approach is to apply multi-dimensional control to the same subject. For example:

- Pose + Depth: Control character posture and spatial depth

- Pose + Canny: Control character posture and edge details

- Pose + Reference: Control character posture while referencing a specific style